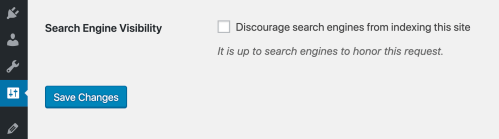

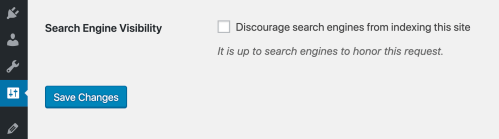

WordPress is changing the method it uses to prevent search engines from indexing sites. Previously, if a user checked the “Discourage search engines from indexing this site” option on a site’s Settings > Reading screen, WordPress added `Disallow: /` to the site’s `robots.txt` file. This prevented crawling but did not always keep sites out of search results.
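To see why `Disallow: /` stops crawling outright, the rule can be fed to Python's standard `urllib.robotparser`, which performs the check a well-behaved crawler makes before requesting a URL. This is a minimal sketch: the `User-agent: *` line, the `example.com` URL, and the `may_crawl` helper are illustrative assumptions, not WordPress code.

```python
from urllib.robotparser import RobotFileParser

# Assumed shape of the pre-5.3 robots.txt when the "Discourage search
# engines" option was checked: one catch-all group that blocks every path.
LEGACY_ROBOTS_TXT = """\
User-agent: *
Disallow: /
"""


def may_crawl(robots_txt: str, url: str, agent: str = "*") -> bool:
    """Hypothetical helper: True if a robots.txt-respecting crawler may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    # Every path starts with "/", so every page is off-limits.
    print(may_crawl(LEGACY_ROBOTS_TXT, "https://example.com/hello-world/"))  # False
```

The check happens before any request is made, which is why a `robots.txt` rule can block crawling without saying anything about whether the URL itself may appear in results.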

As of version 5.3, WordPress will drop the `robots.txt` method in favor of adding an updated robots meta tag that asks search engines not to list the site: `<meta name='robots' content='noindex,nofollow' />`. The meta tag is a more reliable way to keep pages out of search results, and its `nofollow` value also asks crawlers not to follow the links on those pages.
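The tag is ordinary HTML, so a crawler only sees it after downloading the page. The sketch below, built on Python's standard `html.parser`, shows one way a crawler might pull the directives out of that tag; `RobotsMetaParser` and `robots_directives` are hypothetical names for illustration, not part of WordPress or any search engine.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values may be None.
        if tag != "meta":
            return
        attributes = {name: value or "" for name, value in attrs}
        if attributes.get("name", "").lower() != "robots":
            return
        # content is a comma-separated list such as "noindex,nofollow".
        for token in attributes.get("content", "").split(","):
            if token.strip():
                self.directives.add(token.strip().lower())


def robots_directives(html: str) -> set:
    """Return the set of robots meta directives found in an HTML document."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives


if __name__ == "__main__":
    # Self-closing tags are routed through handle_starttag as well.
    page = "<head><meta name='robots' content='noindex,nofollow' /></head>"
    print(sorted(robots_directives(page)))  # ['nofollow', 'noindex']
```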

Users who check the setting to discourage search engines from indexing a site are often looking for a way to hide it, but the setting does not always work as they expect. Jono Alderson summarized the problem and the proposed solution in a comment on the Trac ticket that led to the change:

> The Reading setting infers that it’s intended to prevent search engines from indexing the content, rather than from crawling it. However, the presence of the robots disallow rule prevents search engines from ever discovering the `noindex` directive, and thus they may index ‘fragments’ (where the page is indexed without content).
>
> Google recently announced that they’re making efforts to prevent fragment indexing. However, until this exists (and I’m not sure it will; it’s still a necessary/correct solution sometimes), we should solve for current behaviors. Let’s remove the `robots.txt` disallow rule, and allow Google (and others) to crawl the site.
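The argument in the quote can be modeled as a two-step decision: a crawler consults `robots.txt` first and only reads the robots meta tag on pages it is allowed to fetch. The sketch below is a deliberately simplified, hypothetical model (the `crawler_outcome` function and its result strings are invented for illustration), not a description of how Google or any other search engine actually behaves.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


class _RobotsMeta(HTMLParser):
    """Collects tokens from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.tokens = set()

    def handle_starttag(self, tag, attrs):
        attributes = {name: value or "" for name, value in attrs}
        if tag == "meta" and attributes.get("name", "").lower() == "robots":
            content = attributes.get("content", "")
            self.tokens |= {t.strip().lower() for t in content.split(",") if t.strip()}


def crawler_outcome(robots_txt: str, url: str, page_html: str) -> str:
    """Hypothetical model of a crawler that obeys robots.txt and the robots meta tag."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    if not rules.can_fetch("*", url):
        # The page is never downloaded, so a noindex inside it goes unseen.
        # The bare URL can still be listed from links elsewhere: a "fragment".
        return "not crawled; may be indexed as a fragment"
    meta = _RobotsMeta()
    meta.feed(page_html)
    meta.close()
    if "noindex" in meta.tokens:
        return "crawled; noindex seen, not indexed"
    return "crawled and indexed"


if __name__ == "__main__":
    page = "<head><meta name='robots' content='noindex,nofollow' /></head>"
    # Pre-5.3 behavior: Disallow rule plus noindex, so noindex is never read.
    print(crawler_outcome("User-agent: *\nDisallow: /\n", "https://example.com/", page))
    # 5.3 behavior: no Disallow rule, so the crawler fetches the page and sees noindex.
    print(crawler_outcome("", "https://example.com/", page))
```

In this model, adding the Disallow rule on top of `noindex` makes the outcome worse rather than better, which is the reasoning behind removing the rule.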

In the dev note announcing the change, Peter Wilson recommends that developers who want to keep development sites out of search engines send the HTTP header `X-Robots-Tag: noindex, nofollow` when serving all of the site’s assets, including images, PDFs, video, and other files.
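Because the header travels with the HTTP response rather than inside the HTML, it also covers files that cannot carry a meta tag. The sketch below uses Python's standard `http.server` purely as an illustration of attaching the header to every response; `NoIndexHandler` and `make_server` are hypothetical names, and serving the current directory on `127.0.0.1` is an assumption. Overriding `end_headers` adds the header whether the response is an HTML page, an image, a PDF, a redirect, or an error page.

```python
from http.server import SimpleHTTPRequestHandler, ThreadingHTTPServer


class NoIndexHandler(SimpleHTTPRequestHandler):
    """Serves files from the current directory, tagging every response
    (pages, images, PDFs, error pages) with an X-Robots-Tag header."""

    def end_headers(self) -> None:
        # Runs just before the blank line that ends the headers, so the
        # header is added no matter which code path built the response.
        self.send_header("X-Robots-Tag", "noindex, nofollow")
        super().end_headers()


def make_server(port: int = 8000) -> ThreadingHTTPServer:
    """Hypothetical helper: bind a threaded server to localhost only."""
    return ThreadingHTTPServer(("127.0.0.1", port), NoIndexHandler)
```

Calling `make_server(8000).serve_forever()` would serve the current directory with the header attached, and `curl -I http://127.0.0.1:8000/` should then list `X-Robots-Tag: noindex, nofollow`. On a real staging server the same header would more typically be set in the web server’s own configuration.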

Original: wptavern.com